A Design Fiction in which algorithms are regulated, akin to how the government oversees other aspects of public safety, to mitigate their negative impacts while harnessing their potential for positive change.

Contributed By: Julian Bleecker

Published On: Thursday, January 21, 2021 at 13:43:34 PST

- The Issue

Last issue I got all hopped-up by the pandemonium at The Capitol that went down on January 6th. In the aftermath I was driven to make an argument (it might’ve been a polemic, given its tone) as to why the Algorithm as it is presently constructed is not actually serving us in a positive, life-affirming, up-and-to-the-right kind of way.

You know the point, but it bears repeating: the Algorithm is tuned for outcomes that produce engagement without really caring what that engagement might be. It’s a crude formulation of its goals or purpose, but, near as I can discern, that seems to be the consensus on what it is and why it exists.

At the same time and in the same breathless breath, I was trying to come to grips with my own professional lived reality, which is to say: hey, I’m a product-designing technology guy. I’m an engineer. I program the computer. I design electronic circuits. I built a company that does the same. I design, build and make things, and I love the satisfaction that comes with solving a good, creative problem by imbuing the things people use with intent, values, possibility and meaning through code and hardware.

I’m not ‘anti-technology’ by any stretch of the imagination. Anti-technology doesn’t make any sense to me — that’d be a bit like being anti-money or anti-sardines, or anti-breakfast.

Money, sardines, breakfast, algorithms, technology: these things are here. Billions of people enroll them into their normal, human rituals and routines, and the overwhelming majority believe in them, because sharing beliefs in things that sustain our ambitions and pursuits feels better than the alternatives.

Rather than getting rid of, say, breakfast or, more to the point, technology, let’s become better, more thoughtful, more concerned engineers, designers, technologists, product marketers, ‘cereal entrepreneurs’ (🤷🏽♂️) and so forth. One does that by leading the work with a more thorough consideration of the implications, unintended consequences, pitfalls and unexpected opportunities.

Not just ‘consequences’ in the technical, first-order sense, as in: ‘putting the blue button there may make it difficult for left-handed people to reach it while holding the nozzle.’ But also the implications and consequences outside the formal interaction architecture, which means asking what happens between that hypothetical blue button and continued exposure to the Algorithm behind it, to take just one example.

- The Design Fiction

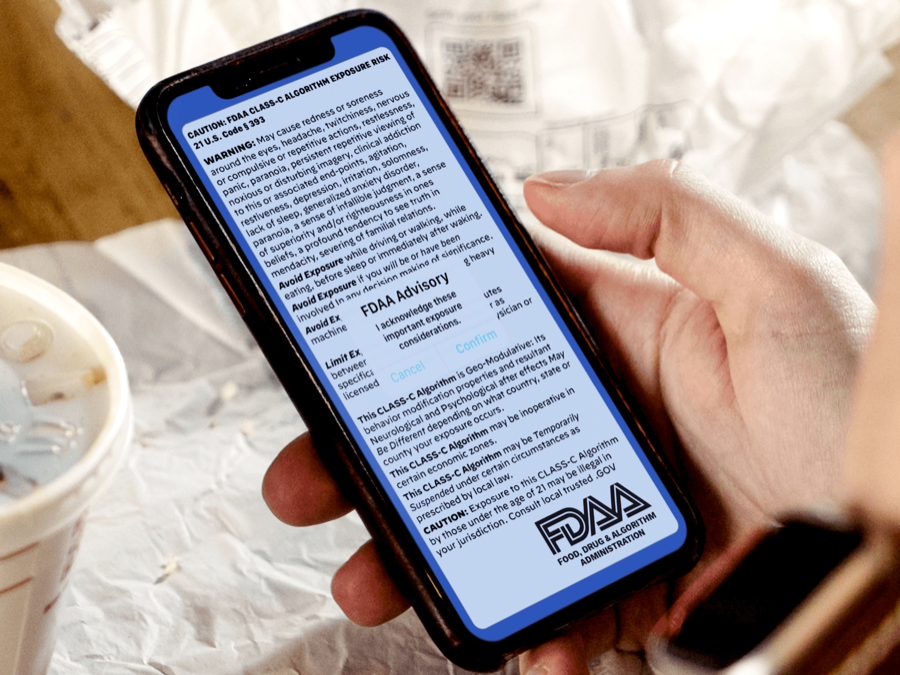

And so, as a kind of redeeming conclusion to writing as self-exorcism after that horrible day, I did a Design Fiction that mashed up a possible near future in which Algorithms are somehow and to some degree regulated, something I suspect (not a prediction) is coming sooner rather than later.

That Design Fiction was a quite partial sketch of a thought that, in a larger Design Fiction project, would serve as ‘stimulus’ for a deeper dive in and around the topic of Algorithms as social actors. In the meantime, those images would go up on a wall to be pondered, reminders of the immeasurable and latent influence of the Algorithm.

I quickly produced those images and some text, sitting here alone in the studio, brainstorming about what might be some of the residual exhaust of such a fictional near future effort, in the form of mundane artifacts. Rather than just postulating somewhere that ‘there will be regulation’ or ‘I think we need some kind of managed, third-party assessor of the fitness of Algorithms for public consumption’, I produced a set of images from that future. They help imagine one of many possibilities by which Algorithms become more fully socialized actors in some near future world.

The image is key — a visual representation, literally. Or an image of an artifact materialized. Something more than just text.

In the Design Fiction mode, the image serves two functions.

1. First, to visualize the imagination, which is to say — to give it some substance and acuity.

2. Second, to move things from the imagination-question **‘What If..’** to the Design Fiction statement **‘As If..’**. Design Fiction prototypes near future possibilities through these ‘As If..’ instantiations; that is, Design Fiction simulates near future possibilities. There’s an important semantic maneuver going on here: we are answering a decently framed ‘What If..’ question with an ‘As If..’ statement.

The notion of ‘What If..there was Algorithm regulation’ goes through this semantic maneuver to construct a representation of a moment in a near future, design-fictional world ‘As If..’ what?

Oh yeah — got it: a world ‘As If..Algorithms were regulated.’

Sometimes those ‘As If..’ prototypes start with images, such as the ones I shared in the last issue of the newsletter. As much as possible, things can then be moved along to physically building things, even with functional characteristics, even when they may seem to be imaginary instantiations of absurd or unlikely outcomes. This is because it is in the making that meaning, comprehension and understanding of what is imagined are generated. Without the making (or, more to the point, the hands-on designing), there is less generative starter material. Without making, the imagination gets stuck in itself.

- The Implications of the Design Fiction

So, to recap that tiny Design Fiction: what I imagined is a government agency assuming responsibility for helping manage Algorithms in a way similar to how babies’ toys, house paint, gasoline, motorcycle brakes, pillow stuffing and pet food are managed.

In this Design Fiction, the presumption evoked by the artifacts I created is that some sort of bureaucracy intercedes. Perhaps, and most likely, that is because the relevant commercial entities that make these Algorithms are not sufficiently incentivized to consider or protect their customers’ well... let’s just say their customers’ psyches.

The typical commercial ‘business operating system’ says nothing about creating these sorts of considerations or protections, really. (Maybe something is codified on the ‘About’ page of the company website where there is some earnest mention as to how their Algorithms are ‘Made with love in Dog Patch’ or ‘we care about your privacy and will never do such-and-so’. Nevertheless..)

In this Design Fictional near future, something like the U.S. Food and Drug Administration is given this responsibility under a new mandate that includes Algorithms — such that it becomes the U.S. Food, Drug and Algorithm Administration.

- In The Matter of Everalbum and Paravision

Now, I mention all of this so I can share with you an item I stumbled across yesterday. It’s a piece on the OneZero channel over on Medium: “The FTC Forced a Misbehaving A.I. Company to Delete Its Algorithm” by Dave Gershgorn (January 2021).

It is an article on a recent Federal Trade Commission consent ruling regarding an Algorithm that was found to be badly misbehaving. It was ruled that this particular Algorithm, a Class-B Facial Recognizer, was to be ‘destroyed’ within 90 days of the issuance of the finding.

It’s worth reading the actual ruling in its entirety; it’s a succinct, 8-page legal document.

Why is it worth it?

In this particular example, the legal document represents an Algorithm as subject to legal remedies for doing something that is not in the best interests of the company’s customers. In fact, the remedy against the Algorithm in this case is that its humans have 90 days to destroy it and to affirm that this was done, under penalty of perjury. If its humans don’t destroy it, they can get sent to the pokey. Real-deal, real-world consequences. No one wants to exchange their Japanese selvedge denim for an orange jumpsuit. Not really.

> A California-based developer of a photo app has settled Federal Trade Commission allegations that it deceived consumers about its use of facial recognition technology and its retention of the photos and videos of users who deactivated their accounts… The proposed order also requires the company to delete models and algorithms it developed by using the photos and videos uploaded by its users. “Using facial recognition, companies can turn photos of your loved ones into sensitive biometric data,” Andrew Smith, Director of the FTC’s Bureau of Consumer Protection, said. “Ensuring that companies keep their promises to customers about how they use and handle biometric data will continue to be a high priority for the FTC.”

It’s also worth reading the Commissioner’s formal statement on the matter, which clearly implies the desire for more direct measures to hold Algorithms and their humans accountable for their collaboration on practices that may be harmful to normal humans.

(By the way, that ‘Class-B Facial Recognizer’ up there? That’s a nudge of a scrap of a mundane future Design Fiction in which Algorithms obtain this sort of specificity and categorization, similar to the managed and regulated categories of food additives, radio frequency bands, herbicides, and general aviation pilot licenses. It might sound like fussy stuff, but categorization is the result of a more forensic level of comprehension as to what’s what. In other words, these are things that are regulated in the hope of making the world better, safer, more habitable, and healthier. I say all of this while also acknowledging the inevitable graft, collusion, and influence peddling that go along with regulatory agencies. But, heck, you take the good, balance it against the bad, and blow whistles on misbehaving actors. Susan Leigh Star and Geoffrey Bowker wrote what I consider the definitive text on the significance of classifying things: “Sorting Things Out: Classification and Its Consequences”.)

- Why Design Fiction?

Images seed the imagination. An appreciation for this is one of the reasons why, when I first outlined Design Fiction, I leaned heavily on images of other worlds and futures as seen in science-fiction cinema.

Anytime one hears that something is difficult to imagine, like wrangling Data and Algorithms, it’s generative to back up and make an image first. That helps the imagination along any of its many possible trajectories.

Recently I was podcast-told, in a very anecdotal, matter-of-fact way, that Homo sapiens’ ability to imagine that which does not yet exist is a kind of super-power that can be credited with our continued existence. But it’s not a given that this super-power is super-good. Like a flabby muscle, it needs exercise, which is another useful benefit of a daily regimen of farm-fresh Design Fiction.

This ability we have to imagine other possibilities, if it is a super-power, also has its verso: a quite dark, ‘Mirror, Mirror’ anti-Spock that we saw on January 6th. Having the ability to imagine things as otherwise, or to speculate about that which does not yet exist, also means we are highly susceptible to dangerous falsehoods: those dystopian Q-y otherworlds.

We are exceptionally hackable, the result of an evolutionary defect perhaps? Or an exploit that we haven’t patched in our brainware that the Algorithm turned to its purposes?

Perhaps this exploit has a positive side. I can imagine that there could be consumer-grade, store-bought algorithms. Certainly there could be prescription algorithms that lead us away from crazytown and towards positively inflected perspectives. Maybe even 99¢ algorithms you pick up like you might a hot coffee and a buttered biscuit. Maybe they are like a multivitamin for the psyche, or an algorithm to talk to on the commute to get a bit tuned up for a hard day at the office. That could make for a fun Design Fiction.

Okay. Until next week. In the meantime, Live Long and Prosper. 🖖🏽