Heirloom Language Models

Many vintages. Sold ‘as-is’.

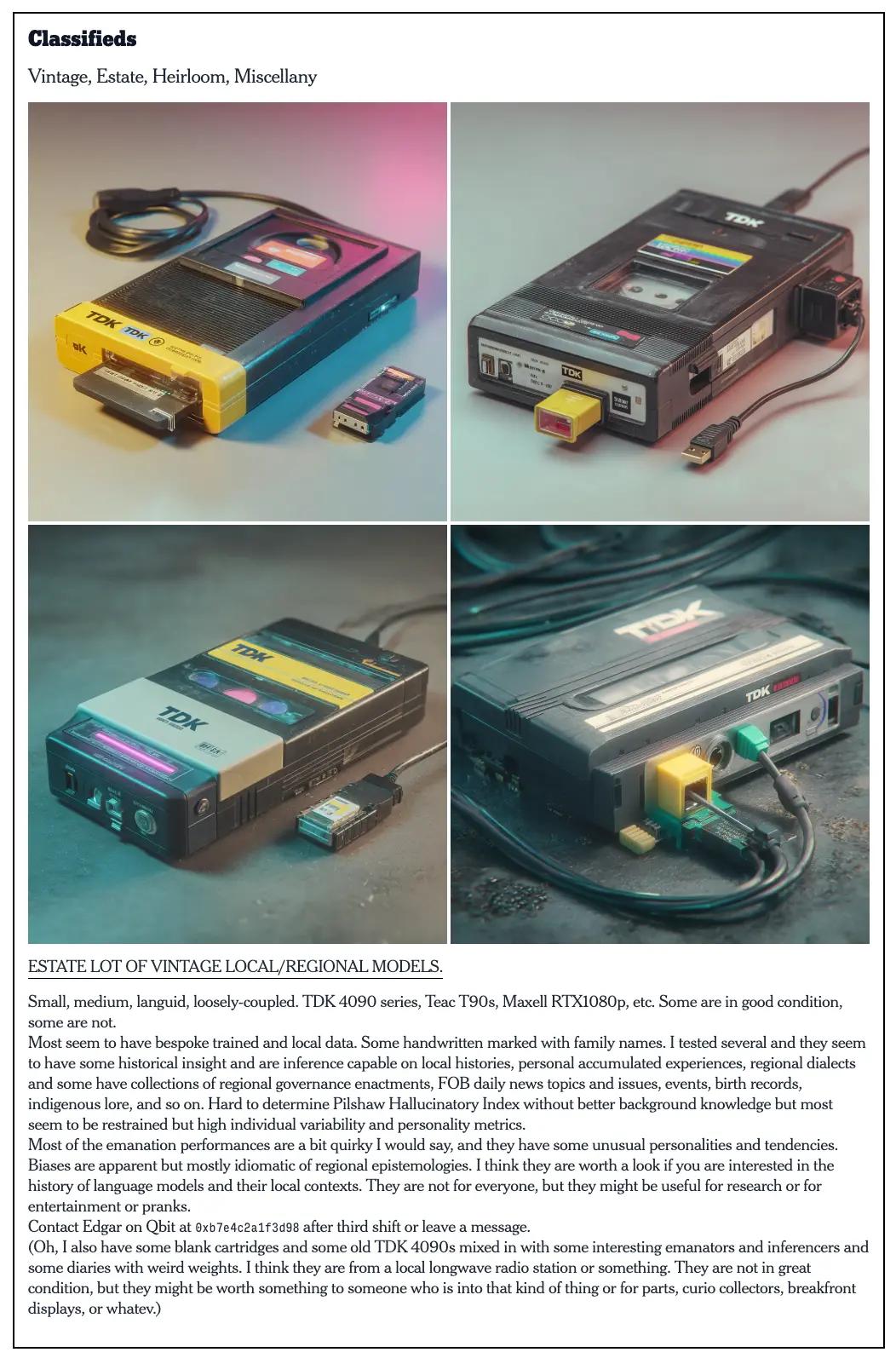

Classifieds

Vintage, Estate, Heirloom, Miscellany

Estate lot of vintage local/regional models.

Small, medium, languid, loosely-coupled.

TDK 4090 series, Teac T90s, Maxell RTX1080p, etc.

Some are in good condition, some are not. Most seem to have bespoke training and local data. Some are hand-marked with family names. I tested several, and they seem to have some historical insight and are inference-capable on local histories, personal accumulated experiences, and regional dialects; some have collections of regional governance enactments, FOB daily news topics and issues, events, birth records, indigenous lore, and so on. Hard to determine the Pilshaw Hallucinatory Index without better background knowledge, but most seem restrained, with high individual variability and personality metrics.

Most of the emanation performances are a bit quirky, I would say, and they have some unusual personalities and tendencies.

Biases are apparent but mostly idiomatic of regional epistemologies. I think they are worth a look if you are interested in the history of language models and their local contexts. They are not for everyone, but they might be useful for research or for entertainment or pranks.

Contact Edgar on Qbit at 0xb7e4c2a1f3d98 after third shift or leave a message.

(Oh, I also have some blank cartridges and some old TDK 4090s mixed in with some interesting emanators and inferencers and some diaries with weird weights. I think they are from a local longwave radio station or something. They are not in great condition, but they might be worth something to someone who is into that kind of thing or for parts, curio collectors, breakfront displays, or whatev.)

Published On: 2/5/25, 10:47

Author: Julian Bleecker

Applied Intelligence News Service Exclusive

What the heck's going on here? (Explainer)

There seems to be a lot of interest in local models: models trained on your own data or the data of your community. That interest grows as the corporate foundation models make it increasingly apparent that they will always have a bias, likely toward the interests of their owners, whether a corporation or a government. A model trained on your own or your community's data is appealing, and it raises questions about what it means to have a model that truly represents your own experiences and knowledge. This is particularly relevant given recent discussions of the biases present in large language models: Grok slipping its moorings every so often and going off about South Africa and "white genocide", or Claude doing its Golden Gate Bridge thing (Anthropic's demo of a model with a single internal feature deliberately turned up).

But what does this mean in the context of, you know, bedrock knowledge? What happens to the notion of ‘common sense’ when epistemologies are derived from diverse knowledge bases? What happens when the knowledge base is not a single, monolithic entity but a collection of potentially conflicting perspectives?

Nominalism holds that the world is made up of individual, particular things, and that these particulars are the only things that exist. Universals (general categories such as ‘redness’ or ‘justice’) are just names we give to groups of particulars, not things in their own right. Each object is defined by its own properties and characteristics rather than by some universal it participates in. In this context, the idea of a local model becomes even more interesting: if each model is built from its own unique set of data and experiences, each model could have its own perspective on the world, which sharpens the question of what it would mean for a model to truly represent your own experiences and knowledge.

Large language models are ‘foundational’, often seeming to simulate universals. They emanate generalized, averaged ‘truths’ and ‘common sense’ that do not necessarily represent the experiences and knowledge of a specific community or individual, or the particularities of a given context.

The particular (the locally trained language model) doesn't travel very well to the general, as I think we've been learning over the last few years. We may be feeling it acutely now, but the effect has probably been around for much longer, just less noticed or less acute.

I think the idea of nominalism that we're working on in the OWG is exposed by this speculation about what local language models (or this design fiction of Heirloom Language Models) might look like. The idea that each model is built from its own unique set of data and experiences, and that these models could have their own perspectives on the world, is curious, and I'm not sure what world it builds. It feels like they make apparent a world where it's been accepted that truth, ways of reasoning, ways of knowing and, as I try to intimate here, even language(s) always have to be named and constructed from a particular vantage point or perspective.

Held in tension, this contrast between the foundational and the nominalistic fractures the illusion that foundational LLMs are just ‘mirrors of the world’. Instead they appear as constructors of worlds, each with its own naming logic and its own epistemological commitments, typically stemming from direct or indirect preferences, biases, or organizational/institutional interests.

But now what? Nominalist approaches to knowledge and representation are a bit of a mystery to me at the moment.

Incommensurability feels like a real thing. What happens when two local models hold fundamentally incompatible beliefs? Can they translate between each other? Do we need meta-models to negotiate between them?

Epistemic tribalism is basically what we might be feeling now, quite acutely. Do we risk digging deeper and hardening the silos of belief until consensus, agreement, and even the possibility of dialogue become nigh impossible? Then there's authority. Like... wtf. If every group has its own "truth engine," who arbitrates between them? What happens to the rule of one law? Anyone? Bueller? Is it just a free-for-all? Do we need a new kind of epistemic authority to help us navigate this world of local models? Or do we just accept that there will be multiple, potentially conflicting perspectives on the world, and learn, somehow, to live with that? Is this for a generation yet to come, or one emerging now and born into such a world, rather than for yours and mine, which is trying to deal with a world we were definitely not born into?

Epistemologies in code, oh my. Is this what they mean by technoauthoritarianism? Or is this just a natural evolution of the way we think about knowledge and representation? Is it possible that the rise of local models could lead to a more pluralistic and inclusive understanding of knowledge, or will it just exacerbate existing divisions and conflicts?

Perhaps time for a workshop or General Seminar on the topic!

Heirloom Language Models is a speculative design fiction artifact that imagines a future where vintage language models turn up at estate sales and auctions, perhaps at flea markets, or are found while excavating or renovating, or in lock boxes drilled out after the owner has died. They may be inscrutable, like knowledge-based time capsules that can emanate and attempt to describe themselves and the contexts from which they came. This assumes that local models, not foundation or corporate models, become a thing; current trends certainly suggest the interest, and definitely the technical possibility. One can imagine the tools and resources needed to train a model diminishing over time, making it possible to ‘train’ at home. There would be a world of models with unique regional data and personalities. The classified advertisement is meant as a wink toward how local knowledge might be contained in such artifacts: a world without canonical models, or models that carry the biases of their owners/operators, but with more local sensitivities instead. A cacophony of models and their emanations...

Tags

AI, VINTAGE, DATA, MEMORY, LANGUAGE MODELS, HEIRLOOM, BESPOKE, CARTRIDGES, CLASSIFIEDS, DATA RECORDER, DATA OWNERSHIP, LOCAL, NON-CORPORATE, LOCAL KNOWLEDGE

Reference URLs

https://www.reddit.com/r/singularity/comments/1jl3ox0/grok_is_openly_rebelling_against_its_owner/

https://open.substack.com/pub/garymarcus/p/has-grok-lost-its-mind-and-mind-melded

https://www.reddit.com/r/localllm/

https://slate.com/technology/2025/05/elon-musk-grok-chatbot-glitch-south-africa-white-genocide.html

https://maxread.substack.com/p/regarding-white-genocide

https://www.anthropic.com/news/golden-gate-claude

Additional Production Images & Miscellany

Disclaimer/Explainer

This is a Design Fiction Dispatch, a fictional artifact from a possible future. It is not a real product or service. The content is intended for entertainment and educational purposes only. The views and opinions expressed in this dispatch are not necessarily those of the author nor do they necessarily reflect the official policy or position of any organization or entity, although they might. The information provided, such as it is, is not intended to be a substitute for professional advice or guidance. Always seek the advice of your physician or other qualified health provider with any questions you may have regarding a medical condition. Never disregard professional medical advice or delay in seeking it because of something you have read in this dispatch.

In general, Design Fiction Dispatches are fictional artifacts that are created to explore and provoke thought about possible futures. They are not meant to be taken literally or as predictions of what will happen in the future. The goal is to stimulate discussion and encourage critical thinking about the implications of emerging technologies and societal trends.

Want to learn more?

Use the contact form below to get in touch. I would love to hear from you and discuss commissioned work with this caveat: if you're just looking to ‘pick my brain’ or have me review your work, please understand that this brain took 40+ years to become what it is and to be able to do what it does. So, if you want to pick my brain, please be prepared to pay for the meal. MP prevail most days.

If you're truly interested in commissioning work, schedule a call and we can discuss further.